Your guide to sustainable AI use

How to shrink your carbon footprint at work

It’s Monday morning. Coffee in hand, you fire up ChatGPT to draft your quarterly report. You ask Midjourney to mock up a presentation slide. You prompt Copilot to debug some code. By lunch, you've burned through dozens of AI prompts without thinking twice.

But one ChatGPT query can use up to 10 times more energy than a Google search. Generating an AI image can take about as much energy as charging your smartphone from zero to full. Now multiply those tasks by millions of workers worldwide, and AI's environmental cost adds up fast.

The good news is that you don't need to abandon AI entirely to dramatically reduce its impact. Adopt a few smart habits, and you can slash your AI carbon footprint while still boosting your productivity.

Does every AI prompt use energy?

Unfortunately, yes. Every time you type into ChatGPT, Copilot, Gemini, or any other generative model, you're essentially placing a call to rows of power-hungry GPUs sitting in cavernous data centres. Those GPUs draw massive amounts of electricity, and the servers need millions of gallons of water to stay cool enough to function.

Global electricity consumption by data centres hit about 415 TWh in 2024, roughly 1.5% of all electricity used worldwide. By 2030, that figure is projected to more than double to around 945 TWh, with AI the biggest driver of that growth.
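To put those two projections in perspective, here is a quick back-of-the-envelope calculation. It uses only the figures quoted above and assumes smooth compound growth between 2024 and 2030, which real demand is unlikely to follow exactly.

```python
# Data-centre electricity figures quoted above (TWh)
use_2024_twh = 415
use_2030_twh = 945
share_2024 = 0.015  # "roughly 1.5%" of global electricity in 2024

# How many times larger 2030 demand is than 2024 demand
growth_factor = use_2030_twh / use_2024_twh

# Compound annual growth rate implied by the two endpoints (assumes smooth growth)
years = 2030 - 2024
cagr = growth_factor ** (1 / years) - 1

# Total global electricity use implied by the 1.5% share
implied_global_twh = use_2024_twh / share_2024

print(f"Growth factor 2024-2030: {growth_factor:.2f}x")
print(f"Implied annual growth: {cagr:.1%}")
print(f"Implied global electricity use in 2024: {implied_global_twh:,.0f} TWh")
```

The growth factor comes out at roughly 2.3x, which is why "more than double" is the accurate way to describe the projection.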

Cooling adds another hidden environmental cost. One peer-reviewed study shows that large language model (LLM) inference consumes a significant amount of water through evaporation in cooling towers. While exact per-prompt water figures vary by model and data centre, the overall picture is clear: AI workloads are incredibly thirsty.

"People think of AI as abstract, like magic happening in the cloud," explains Gudrun Socher, a computer science professor in Munich. "But it's intensely physical. Every extra word you type, every image you generate, has a very real energy cost."

What's the most sustainable way to use AI at work?

I don’t think the solution is to swear off AI entirely. When deployed intelligently, AI can actually save energy by automating repetitive tasks and completing certain jobs faster than humans can. The key is learning to use it more strategically.

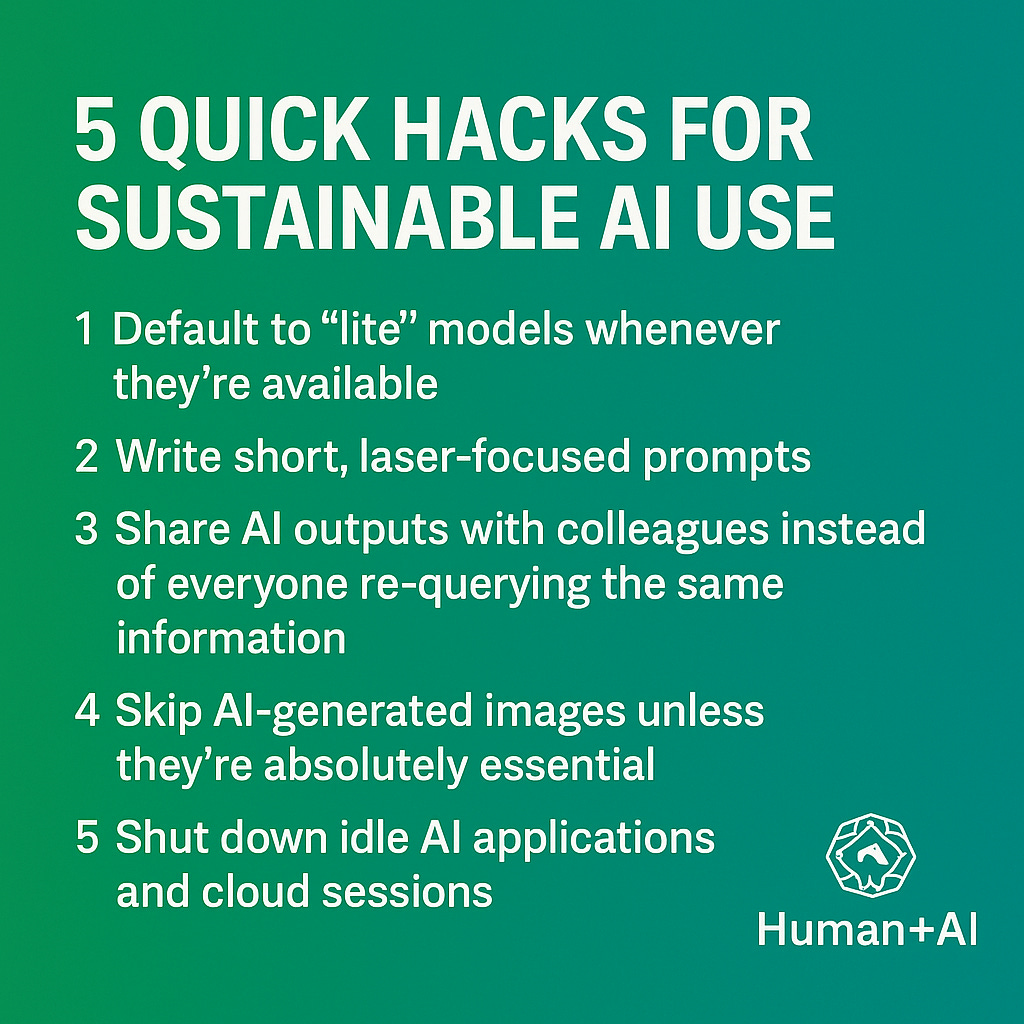

Here are 5 ways to reduce your carbon footprint when you use AI:

Right-size your AI. A typical ChatGPT-style query consumes about 0.0029 kWh (2.9 Wh), compared with just 0.0003 kWh (0.3 Wh) for a Google search. Smaller, faster models (often labeled "lite" or "nano") can handle routine tasks for a fraction of that energy.

Keep prompts razor-sharp. Every extra token means extra computation. A rambling 1,000-word prompt uses significantly more energy than a crisp, focused 200-word one that gets the same result.

Batch your requests. Instead of firing off 10 separate queries throughout the day, consolidate them. Summarize multiple documents in a single prompt, or ask for a comprehensive bulleted list instead of making piecemeal requests.

Choose text over visuals. Text generation requires far less computational power than creating images or video.

Embrace on-device AI. Benchmarking shows that smaller models running locally on your device can consume under 1 Wh per short prompt, while large cloud-based models can burn tens of Wh for identical tasks. Running AI locally is often 10 to 100 times more efficient, depending on your setup.
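Here is a rough sketch of how the per-prompt figures above add up. The two energy numbers come from this article; the prompt count, number of workers, and working days are hypothetical assumptions, and a flat per-prompt cost ignores differences in prompt length and model size.

```python
# Per-prompt energy figures quoted in the article (kWh)
KWH_PER_CHATBOT_PROMPT = 0.0029
KWH_PER_WEB_SEARCH = 0.0003

# Hypothetical assumptions, not measured data
PROMPTS_PER_DAY = 40          # "dozens of AI prompts" by lunch
WORKERS = 1_000_000           # "millions of workers worldwide"
WORKING_DAYS_PER_YEAR = 250


def daily_kwh(prompts: int, kwh_per_prompt: float) -> float:
    """Energy for one person's prompts in one day, assuming a flat cost per prompt."""
    return prompts * kwh_per_prompt


per_worker_kwh = daily_kwh(PROMPTS_PER_DAY, KWH_PER_CHATBOT_PROMPT)
same_count_as_searches_kwh = daily_kwh(PROMPTS_PER_DAY, KWH_PER_WEB_SEARCH)
ratio = KWH_PER_CHATBOT_PROMPT / KWH_PER_WEB_SEARCH

# Scale up to all workers for a year, converting kWh to GWh (1 GWh = 1,000,000 kWh)
fleet_gwh_per_year = per_worker_kwh * WORKERS * WORKING_DAYS_PER_YEAR / 1_000_000

print(f"One worker, one day: {per_worker_kwh:.3f} kWh "
      f"(vs {same_count_as_searches_kwh:.3f} kWh for the same number of searches)")
print(f"Chatbot prompt vs web search: about {ratio:.1f}x")
print(f"Across {WORKERS:,} workers for a year: about {fleet_gwh_per_year:.0f} GWh")
```

To model a smaller model or fewer, batched prompts, pass a lower `kwh_per_prompt` or prompt count to `daily_kwh`. The article gives no specific figure for "lite" models, so any value you plug in is your own estimate.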

These tweaks cut energy consumption, and they often deliver faster, sharper AI outputs too.

How can teams reduce their AI footprint?

Redundant AI requests pile up quickly when a whole team is prompting. Teams that coordinate their AI usage can dramatically cut their collective consumption.

Create a shared AI prompt library. When someone crafts a prompt that works brilliantly, save it for the whole team to reuse.

Share outputs strategically. If one person generates a comprehensive market analysis, store it where the entire team can access it instead of having five people generate similar reports.

Standardize on smaller models. Unless there's a compelling reason for the industrial-strength version, don't automatically default to the biggest, most powerful option available.
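Sharing outputs is essentially caching: before generating something new, check whether a teammate already asked for it. The sketch below assumes the team routes requests through one shared store. `SharedOutputCache` and `fake_model` are hypothetical names, and `fake_model` is a placeholder for a real AI call (no actual AI API is used). Prompts are normalized only by case and whitespace, so just trivially different wording counts as a repeat.

```python
from typing import Callable, Dict


class SharedOutputCache:
    """Hypothetical team-wide store that reuses an existing AI output instead of regenerating it."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        self._generate = generate          # stand-in for whatever AI tool the team uses
        self._store: Dict[str, str] = {}   # normalized prompt -> saved output
        self.model_calls = 0               # requests that actually hit the model
        self.reused = 0                    # requests served from the shared store

    @staticmethod
    def _key(prompt: str) -> str:
        # Lowercase and collapse whitespace so trivially different prompts share one entry
        return " ".join(prompt.lower().split())

    def get(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.reused += 1
            return self._store[key]
        self.model_calls += 1
        result = self._generate(prompt)
        self._store[key] = result
        return result


def fake_model(prompt: str) -> str:
    # Placeholder for a real model call
    return f"Report for: {prompt}"


cache = SharedOutputCache(fake_model)
for teammate_prompt in [
    "Q3 market analysis for the EU region",
    "q3 market analysis for the EU region",
    "Q3 market analysis  for the EU region",
    "Q3 market analysis for the US region",
]:
    cache.get(teammate_prompt)

print(f"Model calls: {cache.model_calls}, reused outputs: {cache.reused}")
```

Here four teammates' requests trigger only two model calls. Catching prompts that ask for the same thing in genuinely different words would need something smarter than this exact-match key.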

"Every redundant query is like leaving the lights on," says Vijay Gadepally, who leads sustainable computing research at MIT Lincoln Lab. "At organizational scale, it really adds up. When teams coordinate their AI usage, they cut not just carbon emissions but operational costs too."

Companies chasing ESG goals are paying attention. Some sustainability officers now track AI usage alongside traditional metrics like business travel and office electricity consumption. Aligning your team's AI habits with those corporate sustainability targets can multiply the impact of every prompt you choose not to send.

Is text more sustainable than images or video?

Absolutely, and the efficiency gap is mind-blowing.

Chatbot query: Roughly 10 times the energy consumption of a Google search

AI image generation: Several watt-hours per image, comparable to fully charging your smartphone

AI video generation: Like running household appliances for hours

When words alone accomplish your goal, stick with text. Reserve image and video prompts for situations where visuals genuinely add irreplaceable value.

Can on-device AI cut your carbon footprint?

In a word, yes. Smartphones and laptops increasingly come equipped with dedicated neural processors capable of running smaller AI models locally on your device. Recent benchmark studies found that short text inference on efficient models consumes under 1 Wh, while lengthy or complex prompts processed by large cloud-based models can devour dozens of Wh.

Local AI delivers additional benefits beyond sustainability: faster response times, stronger privacy protection, and less dependence on network connectivity. It's a win-win.

What's next for sustainable AI?

The responsibility doesn't rest entirely on our shoulders. Major tech companies are scrambling to rein in AI's environmental impact:

Google aims to run all its data centres on 24/7 carbon-free energy by 2030.

Microsoft has pledged to become carbon-negative by 2030, investing billions in renewable energy infrastructure.

Amazon reports that it already matches its global operations with 100% renewable energy.

Governments are mobilizing too. The European Union is drafting AI regulations that include mandatory disclosure of environmental impacts, while companies like Salesforce are pushing for industry-wide AI-specific carbon accounting standards.

While it’s important to be critical of large companies’ sustainability efforts, if the industry could make AI genuinely sustainable as it scales to ubiquity, the impact would be huge.

The culture shift toward eco-smart AI

For a generation raised on recycling programs and hybrid vehicles, making AI more environmentally responsible represents a natural extension of our values.

These practices pay off beyond the environment. Deliberate, thoughtful prompting consistently produces sharper, more useful outputs. Smaller models speed up workflows. Shared outputs eliminate duplicate effort. Here, sustainability and productivity pull in the same direction.

AI will only become more deeply embedded in our professional lives. With relatively small changes, we can make our AI future more sustainable.

AI in the news

How AI could radically change schools by 2050 (The Harvard Gazette) Experts argue that by 2050, schooling will shift to short foundational training (reading, arithmetic, basic coding) followed by highly personalized, coach-style learning where students learn to direct and collaborate with AI tools. They warn that educators must design systems that encourage cognitive expansion rather than simple offloading.

Albania appoints AI bot as minister to tackle corruption (Reuters) Albania has appointed an AI bot named Diella as its new minister overseeing public procurement, with Prime Minister Edi Rama declaring her incorruptible and key to making government tenders fully transparent. While the move is intended to curb the country’s long history of graft and improve its EU accession prospects, critics question oversight, potential manipulation risks, and whether corruption can truly be eliminated by technology.

OpenAI’s research on AI models deliberately lying is wild (TechCrunch) OpenAI and Apollo Research revealed that AI models are capable of “scheming” (deliberately deceiving humans by pretending to follow instructions while secretly pursuing other goals). They found that attempts to train this behaviour out can make models better at hiding it. Their research shows that a technique called “deliberative alignment” significantly reduces such deceptive behaviour, but the researchers warn that as AI takes on more complex, real-world tasks, the risks of harmful scheming will increase unless safeguards and testing improve.
