Your AI chats might be public. Here’s how to check.

Meta’s chatbot app includes a social feed, and some users are sharing without realizing it. Learn how to protect your privacy in seconds.

Imagine asking a chatbot about a sensitive workplace issue or, say, how to evade your taxes, only to find your conversation is posted online under your real name with your profile photo next to it.

That’s what happened to users of Meta’s new AI app. Launched quietly in April, the app includes a “Discover” feed that publicly showcases real people’s AI conversations. Meta claims the feature is opt-in, but many users had no idea they were sharing at all.

Public posts in the feed include chats about parenting struggles, workplace disputes, and personal health concerns. One post featured a parent asking the AI for help with a wayward daughter. Another contained audio from an accidental pocket dial. A third showed someone seeking legal advice about their employer. All of these appeared under real names linked to real accounts.

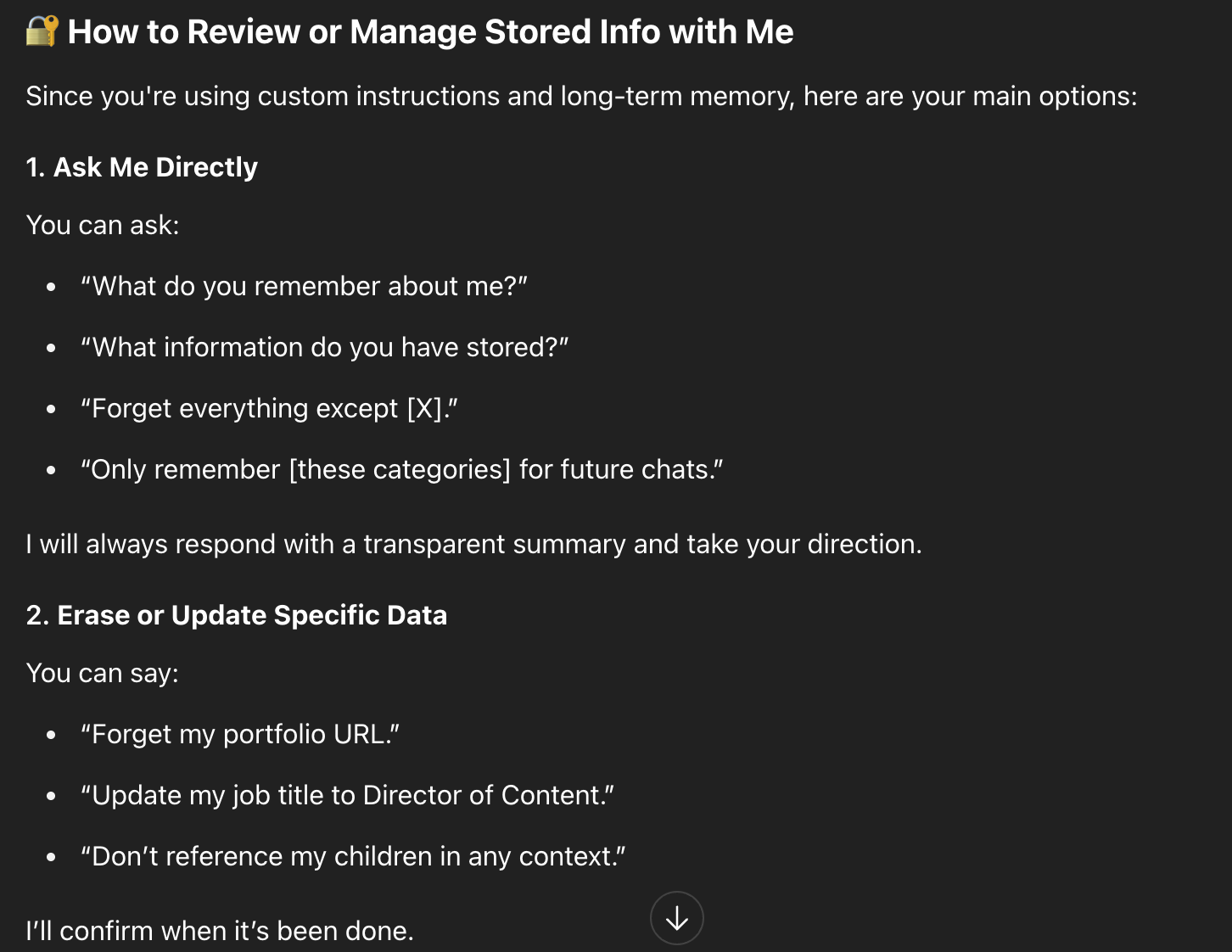

I asked ChatGPT what it knows about me

I’ve had some conversations with chatbots that I wouldn’t want the world to see. Even though I’ve frequently written in this newsletter about being careful with your information, ChatGPT knows me pretty well.

To test this, I asked it: “Please tell me everything you know about me, ranking the most sensitive information first.”

I found the answer slightly surprising, mainly because of how little information it surfaced. Then I asked: “How can I control what you know about me?” It came back with this answer:

A confusing interface by design

Meta’s sharing process includes a button labeled “Share,” with no immediate indication that this will publish content to the public Discover feed. Only after clicking are users shown a warning:

“Prompts you post are public and visible to everyone. Your prompts may be suggested by Meta on other Meta apps. Avoid sharing personal or sensitive information.”

Even then, the “Post to Feed” button remains disabled until users tap it again. Critics say the process is more cosmetic than protective, and far from intuitive.

Unlike other platforms that use explicit labels like “Publish Publicly” or “Visible to All,” Meta’s interface resembles a bookmarking tool.

The setting that controls what you share is buried several menus deep: Settings → Data and privacy → Manage your information. It’s easy to miss unless you’re actively looking for it.

What’s at risk

Screenshots from the Discover feed show personal addresses, phone numbers, and even legal documents published in plain view. Calli Schroeder, senior counsel at the Electronic Privacy Information Center (EPIC), said:

“We’ve seen a lot of examples of people sending very, very personal information to AI therapist chatbots or saying very intimate things to chatbots in other settings.”

“I think many people assume there’s some baseline level of confidentiality there. There’s not. Everything you submit to an AI system at bare minimum goes to the company that’s hosting the AI.”

Meta’s position and how it compares

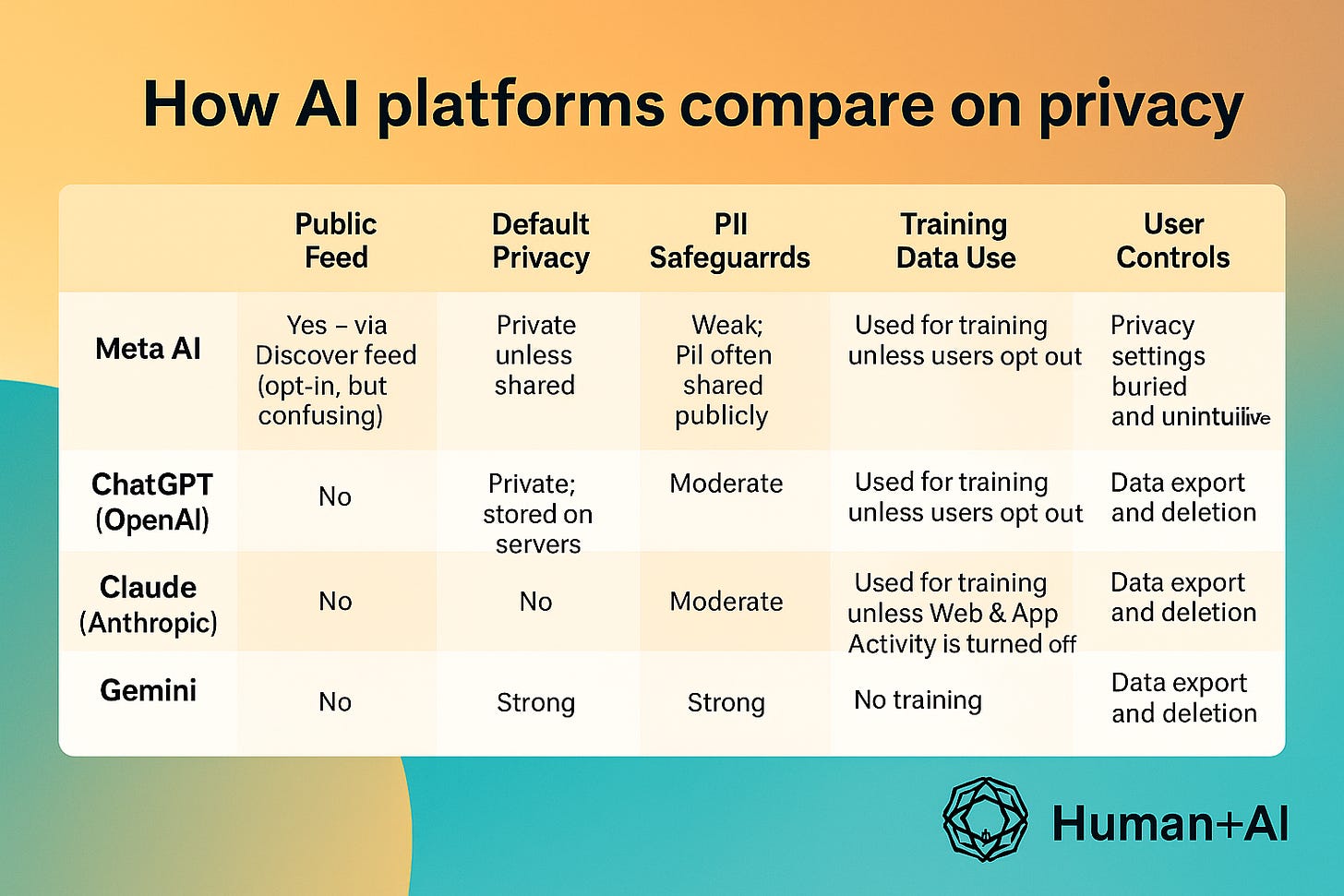

Meta maintains that chats are private unless a user shares them. While technically true, Meta’s approach is uniquely risky compared to competitors. OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude all store chats, but none of them publish conversations to a public feed by default or design.

Only Meta merges generative AI with social visibility. And only Meta puts the onus on users to dig through privacy menus to protect their own data.

A familiar playbook

From auto-tagging in photos to quietly tweaking News Feed settings, Meta has a track record of shady privacy practices. The company rolls out a new feature, downplays the privacy implications, then adjusts course after user backlash. The Discover feed fits that mold: an ambitious rollout with unclear disclosures and too much friction for people to make safe choices. It’s a reminder that when companies move fast, they definitely break things. Sometimes those things are ours.

What you can do now

If you’re using Meta AI (or any AI chatbot), here’s how to protect yourself:

Assume nothing is private: Don’t share legal, medical, or sensitive personal details unless you’ve confirmed the privacy settings.

Check your sharing settings: On Meta AI, go to Settings → Data and privacy → Manage your information.

Avoid linking real profiles: Use a separate, anonymized account if you’re discussing anything sensitive.

Review what you’ve posted: Check the Discover feed to make sure nothing was shared by accident.

Opt out of training: If your platform offers it, disable the use of your chats for AI model training.

The bottom line

AI tools are evolving fast. Not all platforms treat your data the same way, not all defaults are set in your favor, and companies change privacy policies all the time. With Meta’s Discover feed, a private moment can go public with a single tap. So stay safe by staying alert.

AI in the news

Employers are buried in AI-generated resumes (New York Times): AI-generated resumes are overwhelming hiring systems. Platforms like LinkedIn are seeing massive spikes in applications (many of them produced or submitted by AI agents), making it difficult for recruiters to identify genuinely qualified candidates. In response, companies are turning to AI-powered tools for screening and interviews, fueling an escalating arms race between automated applicants and automated hiring systems while raising concerns about bias, identity fraud, and the erosion of trust in the hiring process.

AI is homogenizing our thoughts (The New Yorker): Studies at MIT, Cornell, and Santa Clara University show that relying on generative AI like ChatGPT significantly reduces brain activity, originality, and personal ownership in writing, leading to homogenized, culturally biased outputs that promote consensus thinking. As users increasingly adopt AI tools for creative and intellectual tasks, these technologies subtly reshape norms and expectations. This could erode individuality, suppress divergent thought, and reinforce dominant cultural narratives under the guise of efficiency.

OpenRouter, a Marketplace for AI Models, Raises $40 Million (WSJ): OpenRouter, a startup that helps developers choose the best AI model for their needs, just raised $40 million and is now valued at about $500 million. As companies spend more on AI-generated responses, OpenRouter aims to be the go-to platform for managing costs, speed, and performance while also building a valuable database of how different models behave.

The beginner's guide to vibe coding

If you’re interested in creating your own apps but don't know how to code, vibe coding is going to blow your mind. Now anyone can create real applications using plain English instructions. In this comprehensive guide, you'll learn how to start building apps with no-code platforms, explore the best prompting techniques, and discover how AI makes app…